Code Blindness

How to prevent appalling software quality when using coding agents

I am still not sure how to get the human out of the loop when working with coding agents. My personal workflow remains human-driven, built around a planning and implementation loop.

The hype around vibe coding and context engineering has created unmistakable downward pressure on both code quality and product quality.

Keeping coding agent drivers responsible - the necessity of culture

Source code has become a lot cheaper to produce, but the more complex and intricate the project becomes, the harder it gets to make the coding agent behave well.

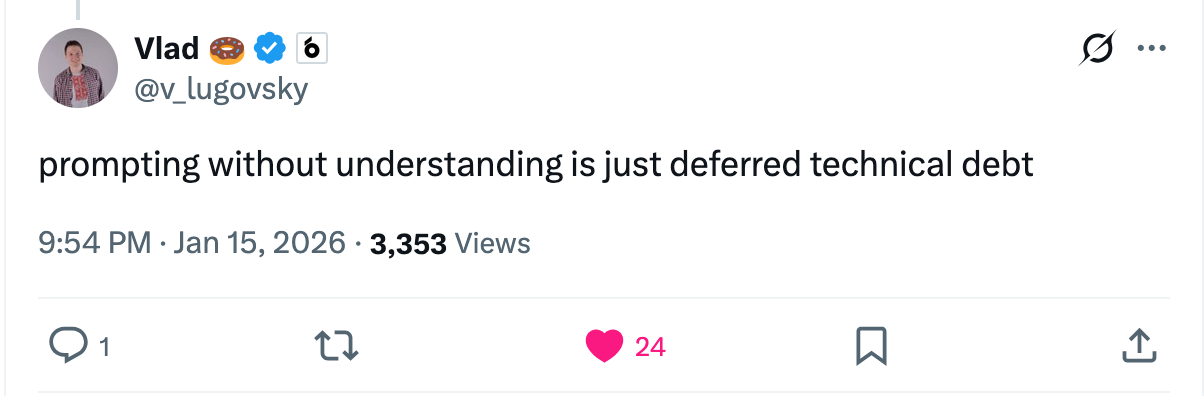

Whether the cause is context window limitations, missing guardrails in the project, or simply total nonsense produced by the model, it has never been more important to understand the source code deeply instead of relying on green unit tests!

I lost count of the generated unit tests I’ve seen that merely imitate the code they are supposed to test. Multiple times, they only tested mock behavior. I never committed such code, but I still had to fix it.
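To make the mock-only pattern concrete, here is a minimal sketch in Python. The function `apply_discount` and both test classes are hypothetical and exist only for illustration. The first test never calls the real function and would stay green even if `apply_discount` were broken. The second one exercises the actual behavior.

```python
import unittest
from unittest.mock import Mock


def apply_discount(price: float, percent: float) -> float:
    """Hypothetical function under test: reduce price by a percentage."""
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    return round(price * (1 - percent / 100), 2)


class MockOnlyTest(unittest.TestCase):
    def test_discount(self):
        # Anti-pattern: the real function is never called.
        # The test configures a mock and then asserts on the mock's own answer.
        fake = Mock(return_value=90.0)
        self.assertEqual(fake(100.0, 10), 90.0)
        fake.assert_called_once_with(100.0, 10)


class BehaviorTest(unittest.TestCase):
    def test_discount(self):
        # Calls the real implementation and checks its result.
        self.assertEqual(apply_discount(100.0, 10), 90.0)

    def test_rejects_invalid_percent(self):
        # Also covers the error path, which the mock-only test cannot see.
        with self.assertRaises(ValueError):
            apply_discount(100.0, 150)
```

Both classes pass when run with `python -m unittest`, which is exactly the problem: a green result says nothing about which of the two kinds of test produced it.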

Cheap code, expensive mistakes

At first glance, this may sound like a personal workflow issue. But when looking at open source projects, it becomes clear that this is a broader pattern: coding agents often generate bad code.

In May 2025, David Gerard asked in an article why there are no AI open source contributions.

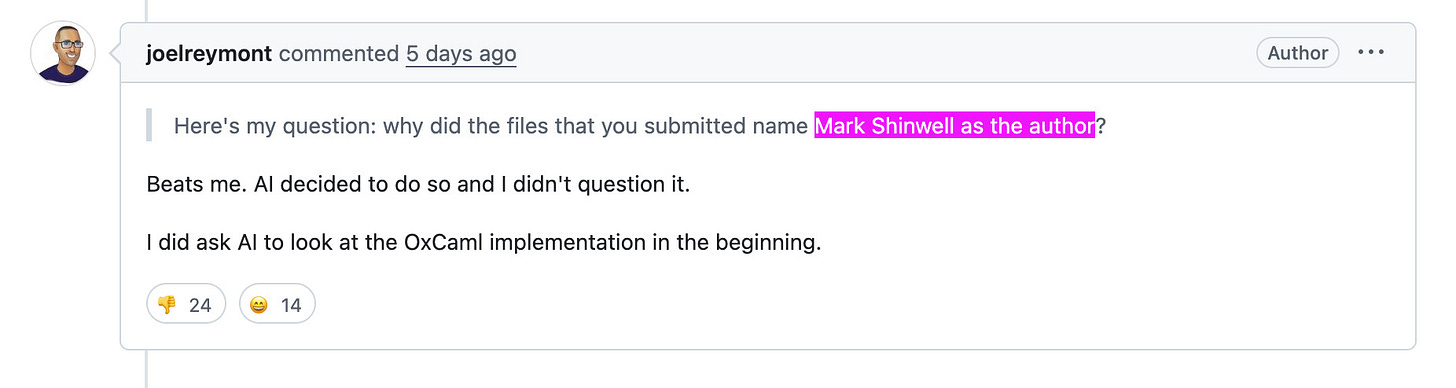

Thanks to the transparency of GitHub, we can look at the reasons in real time.

The misguided notion that “code is cheap now” causes naïve or inexperienced developers to ignore obvious gaps in their understanding of both the project and the generated code. They commit and push changes that create the illusion of progress, even when their coding agents destroy functional code with broken “fixes” or when they open pull requests containing thousands of lines of code that nobody has reviewed. When asked about odd attributions or logic, they reply that they did not write a single line of the code themselves, often in AI-generated replies. Their inability to fix simple issues and their hacky AI workarounds are sold as great achievements.

Our teams and organizations must not allow that! Keep every developer on the team accountable.

It is no coincidence that open source projects respond by introducing authentication or stricter contributor verification.

Accountable developers and bad code

While this should be obvious, I wanted to use it as an introduction to another problem I noticed: Code Blindness.

Code blindness describes the phenomenon of committing sub-par code that seems acceptable at the time, while just 24 hours later obvious issues stand out.

Coding agents often generate extremely large diffs. The sheer volume creates real cognitive overload and makes it difficult to spot subtle bugs, broken assumptions or architectural regressions.
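A rough size check can make that volume visible before a review starts. The sketch below is illustrative only: it sums the added and deleted lines from the output of `git diff --numstat`. The function names and the 400-line limit are arbitrary assumptions, not an established threshold.

```python
def changed_lines(numstat: str) -> int:
    """Sum added and deleted lines from `git diff --numstat` output."""
    total = 0
    for line in numstat.splitlines():
        # Each line has the form: added<TAB>deleted<TAB>path
        parts = line.split("\t")
        if len(parts) < 3:
            continue
        added, deleted = parts[0], parts[1]
        # Binary files are reported as "-" for both counts; skip them.
        if added.isdigit() and deleted.isdigit():
            total += int(added) + int(deleted)
    return total


def needs_split(numstat: str, limit: int = 400) -> bool:
    """Return True when the diff exceeds the (assumed) review limit."""
    return changed_lines(numstat) > limit
```

A check like this only flags diffs that are too large to review in one sitting. It says nothing about whether the code in them is correct.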

The only reliable form of quality control is a strict code review by a second pair of eyes.

Practical guardrails I use myself

To mitigate the risk of committing bad code, I introduced a few rules for myself:

1. Use planning extensively, with file references and concrete code examples wherever possible.

2. Read every line of code. Always inspect surrounding code and understand how the new code interfaces with existing logic.

3. Introduce time separation. If it’s a bigger change or multi-day work, I review the code the next day before continuing.

Tooling, alienation, and discipline

In 2005, Charles Petzold wrote and spoke about “Does Visual Studio Rot the Mind?”, reflecting on how tooling shapes and even intrudes on the way software engineers think.

Coding agents are a far more intrusive tool than IDEs ever were. That makes discipline and self-awareness not optional, but essential.

Without them, developers become alienated from their own work, shipping code they do not fully understand, cannot defend, and no longer feel responsible for, ultimately dooming both the product and the business it’s meant to drive.